Edge Computing: How To Make It ‘The Nirvana’ for Exploding Data Glut

Smart devices are becoming more numerous – and valuable repositories of data. Denodo’s Ravi Shankar explores how a de-centralized edge computing architecture is key to unlocking fast data for huge value.

by Ravi Shankar, senior vice president and chief marketing officer, Denodo

"Despite the progress in edge devices, it remains difficult – if not impossible – to collect and analyze information from a central point."

Devices such as smart switches, thermostats and third-generation voice assistants are becoming more intuitive by the day. They are also becoming business-critical as they gather voluminous amounts of data daily, which they use to learn and adjust automatically.

Despite the progress in edge devices, today it remains difficult – if not impossible – to collect all of this information into one central repository, analyze it there, and then quickly push recommendations back to the devices.

But in 2020 and beyond, technology will evolve so companies can execute the compute function closer to the devices (a.k.a. at the edge). With computing at the edge, devices will operate more efficiently because data is processed locally and the devices themselves can learn to adjust in real time. Notably, data and insights will be faster and fresher because most data will no longer have to travel to a central location before it can be used.

Devices Are Becoming Smarter – The Architectural Implication

It was not until a few years ago that traditional devices became smarter and new smart devices started emerging, such as smart voice assistants that let us ask “Alexa, how’s the weather today?” and get a response.

Now, such devices have become more intelligent with the ability to adjust on the fly.

For example, Google’s Nest uses machine learning algorithms to learn when the residents are home or away on weekdays or weekends, based on daily temperature adjustments, over a few weeks’ time. With this information, Nest can adjust the temperature by itself throughout the week and weekends.

Another example is Google Maps learning when a person travels to or from their office. After a few weeks of commuting, Google Maps starts suggesting when the person should leave for the office or home, based on traffic conditions.

Centralized Analysis of Data To Drive Intelligence is Becoming Harder

Traditionally, enterprises have adopted a centralized approach to analyzing data and deriving intelligence from it. For instance, data warehouses, the workhorse of business intelligence, are well-known central repositories that can turn raw data into insights. The process that feeds them, known as ETL (extract, transform, load), extracts data from operational systems, transforms it into the appropriate format, and then loads it into the data warehouse.
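To make the three ETL stages concrete, here is a minimal sketch in Python. It is illustrative only: the sample rows, the `readings` table, and the function names are assumptions made for this example, and an in-memory SQLite database stands in for a real data warehouse.

```python
import sqlite3

# Hypothetical raw rows from an operational system:
# (device_id, ISO timestamp, temperature in deg F stored as text)
RAW_ROWS = [
    ("thermostat-1", "2020-01-06T08:00:00", "68.0"),
    ("thermostat-1", "2020-01-06T18:00:00", "72.5"),
    ("thermostat-2", "2020-01-06T08:00:00", "n/a"),  # malformed reading
]


def extract():
    """Extract: read raw rows from the operational source (a list here)."""
    return list(RAW_ROWS)


def transform(rows):
    """Transform: parse temperatures, convert deg F to deg C, drop bad rows."""
    cleaned = []
    for device_id, timestamp, temp_f in rows:
        try:
            temp_c = round((float(temp_f) - 32) * 5 / 9, 2)
        except ValueError:
            continue  # skip readings that cannot be parsed
        cleaned.append((device_id, timestamp, temp_c))
    return cleaned


def load(conn, rows):
    """Load: write the cleaned rows into the warehouse table."""
    conn.execute(
        "CREATE TABLE IF NOT EXISTS readings "
        "(device_id TEXT, ts TEXT, temp_c REAL)"
    )
    conn.executemany("INSERT INTO readings VALUES (?, ?, ?)", rows)
    conn.commit()


def run_etl(conn):
    """Run all three stages and return the number of rows now stored."""
    load(conn, transform(extract()))
    return conn.execute("SELECT COUNT(*) FROM readings").fetchone()[0]
```

Calling `run_etl(sqlite3.connect(":memory:"))` moves every valid row into the central table. The point to notice is that all raw data travels to one place before any analysis happens, which is the pattern the rest of this article questions.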

For many years, this architecture has proven effective. But in the era of edge devices, traditional physical data warehouses are losing their place as the central source of truth. This is because they are built to store structured data, while much of the data now flooding in is unstructured.

Also, the volume of data is exploding. It has become so vast that for many use cases, it is no longer economically feasible to store all of the data in a single data warehouse.

To overcome these challenges, companies transitioned their central repository to cheaper alternatives like Hadoop, which can also store unstructured data.

Despite these evolutions, it is still impossible to collect all of the information generated across multiple devices residing in various locations across the world into one central repository that is thousands of miles away. Nor can the information be analyzed for intelligence by this central system, and then used to make recommendations all the way back to the devices for optimal performance.

Edge Computing as the Solution: Execute Compute Closer To the Data

So what’s the missing piece?

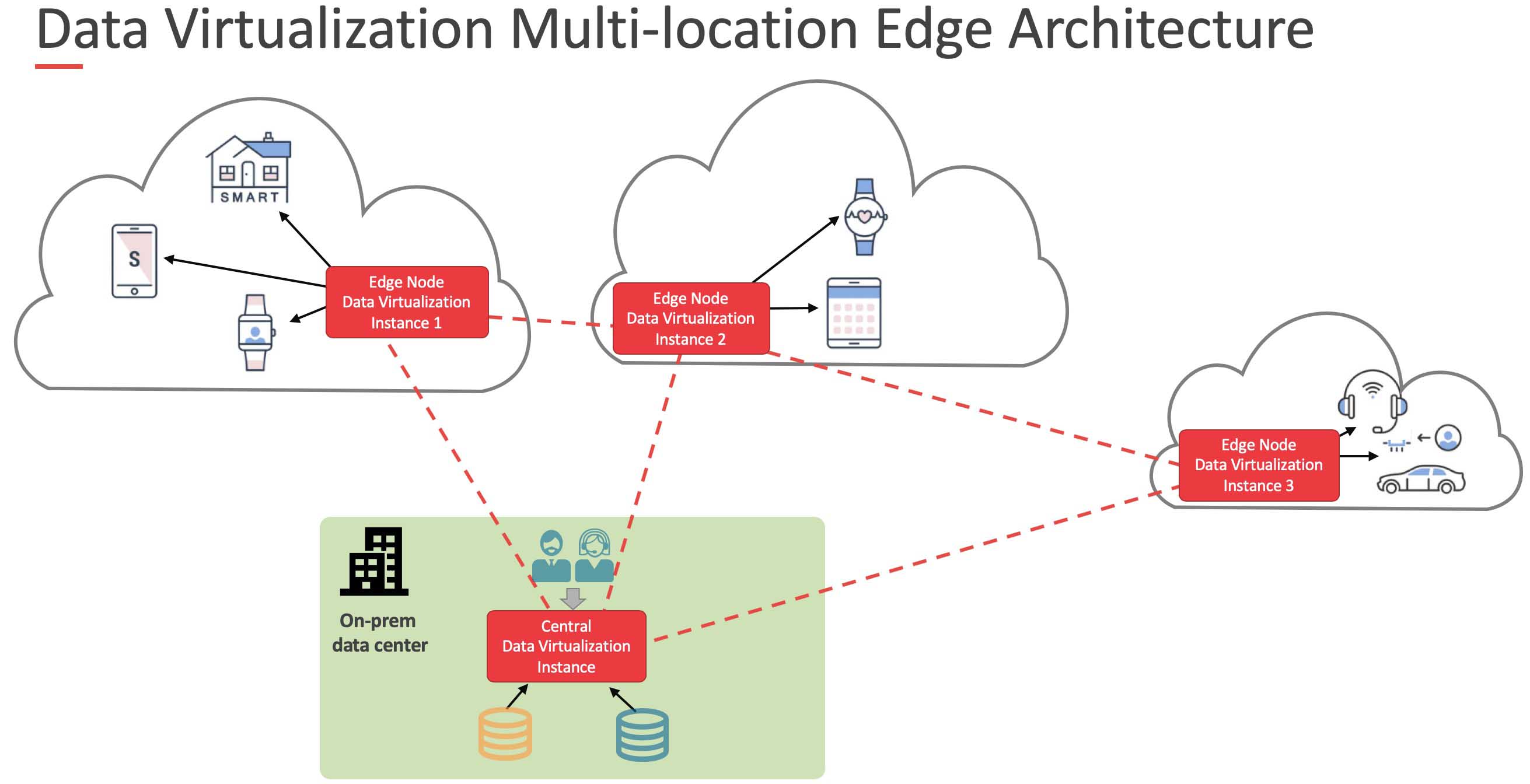

In our view, what’s missing is the technology to execute the compute function closer to the devices themselves. The emergence of ‘edge computing’ architectures enables devices to send the data they generate to an edge node, a system that is closer to the devices, for analysis or computation. Thus, the devices get the intelligence they need much faster from the edge node than they would from a distant central system.

In this setup, the edge nodes are connected to the central system and they transmit only the information that is needed for the central system to analyze across all of the various devices.

As a result, there is a duality of computation. Some of it happens at the edge nodes, to the extent needed for local operation. At the same time, selected data is transmitted to a central analytical system, which performs the holistic analysis across all of the enterprise systems.
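The split between local and central computation can be sketched as follows. This is a simplified illustration under stated assumptions, not a reference design: the `EdgeNode` class, the `threshold` value, and the `"cool_down"` action are hypothetical names invented for this example.

```python
from dataclasses import dataclass, field
from statistics import mean


@dataclass
class EdgeNode:
    """Hypothetical edge node: reacts locally, forwards only a summary."""
    node_id: str
    threshold: float = 30.0  # assumed local alert threshold
    readings: list = field(default_factory=list)

    def ingest(self, device_id, value):
        """Local computation: store the reading and answer the device at once."""
        self.readings.append((device_id, value))
        return "cool_down" if value > self.threshold else "ok"

    def summary(self):
        """Central computation input: a compact record instead of raw readings."""
        values = [value for _, value in self.readings]
        if not values:
            return {"node": self.node_id, "count": 0}
        return {
            "node": self.node_id,
            "count": len(values),
            "mean": round(mean(values), 2),
            "max": max(values),
        }
```

In this sketch, `ingest` gives each device its answer without a round trip to the central system, while `summary` produces the only record that needs to leave the edge node, however many raw readings it has seen.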

This smart filtering of only required data opens an opportunity. That’s because the technology for processing data at the edge and transmitting only the required information to a central system is available today.

Without the burdensome requirements of a high-throughput integration architecture, data virtualization technologies can perform this selective data processing and deliver the results in real time without replicating the underlying data.

It works like this:

As the data comes in from various devices, the data virtualization instance that sits at the edge node closer to these devices integrates the disparate data from them and extracts just the results.

It then delivers them to another instance of data virtualization that sits in the central location, closer to the data consumers, who use reporting tools to analyze the results.

So, a network of data virtualization instances, some at the edge nodes and all connected to a central data virtualization instance in a multi-location architecture, completes the edge computing framework.
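The flow described above can be sketched in a few lines of Python. This is a toy model, not the API of any data virtualization product: `EdgeView`, `CentralView`, and their methods are hypothetical names, and plain lists stand in for device data sources.

```python
class EdgeView:
    """Hypothetical stand-in for a data virtualization instance at an edge node."""

    def __init__(self, name, sources):
        self.name = name
        # Mapping of source name -> raw readings; the raw data stays at the edge.
        self._sources = sources

    def query_total(self):
        """Integrate the disparate local sources and return only the result."""
        values = [v for rows in self._sources.values() for v in rows]
        return {"edge": self.name, "count": len(values), "total": sum(values)}


class CentralView:
    """Hypothetical central instance that combines results from the edges."""

    def __init__(self, edges):
        self._edges = edges

    def average(self):
        """Answer a consumer's query on demand without copying raw readings."""
        results = [edge.query_total() for edge in self._edges]
        count = sum(r["count"] for r in results)
        total = sum(r["total"] for r in results)
        return total / count if count else None


factory = EdgeView("factory", {"sensor_a": [10, 20], "sensor_b": [30]})
store = EdgeView("store", {"meter": [40]})
central = CentralView([factory, store])
print(central.average())
```

The design choice to notice is that `CentralView` never holds the raw readings. It asks each edge instance for a small result at query time and combines those results, which is the selective, non-replicating behavior the article attributes to data virtualization.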

Summary: Benefits of Edge Computing Enforced with a Distributed Architecture

The most significant advantage of edge computing is time savings. Over the past several years, two aspects of the technology have evolved much faster than the others—storage and compute.

Today’s mobile phones have more memory and compute power than the desktops of 30 years ago. Yet one aspect of the technology has not evolved as fast: the bandwidth to transmit data. It can still take minutes or hours for large volumes of data to move from one location to another. With devices sitting farther and farther from the systems that analyze their data, in the cloud and across continents, it becomes imperative to transmit the minimum amount of data possible to improve overall efficiency.
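A rough calculation shows why the amount of data sent matters. The figures below are purely illustrative assumptions (they are not measurements from any real deployment), and the formula is idealized: it ignores latency and protocol overhead.

```python
def transfer_seconds(payload_bytes, bandwidth_mbps):
    """Idealized transfer time: payload bits divided by the link rate."""
    return payload_bytes * 8 / (bandwidth_mbps * 1_000_000)


RAW_BYTES = 5 * 10**9      # assumed: 5 GB of raw device readings
SUMMARY_BYTES = 5 * 10**6  # assumed: 5 MB of edge-computed summaries
LINK_MBPS = 100            # assumed: 100 Mbit/s link to the central system

print(transfer_seconds(RAW_BYTES, LINK_MBPS))      # raw data
print(transfer_seconds(SUMMARY_BYTES, LINK_MBPS))  # summaries only
```

Under these assumed numbers, shipping the raw data takes minutes, while shipping only the summaries takes well under a second. The ratio, not the specific values, is the point.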

By delegating compute to the edge, these devices will learn and adjust in real-time rather than being slowed down by the transfer of information to and from a central system.

As technology evolves, it creates new problems that require new solutions. With the advent of smart devices, data volume has exploded and reduced the efficacy of centralized computation and analysis. Edge computing solves this problem by making smart devices even smarter: it processes the data they need close to them, at the edge node, and then transmits only the data that is required for centralized computation.

As a result, edge computing improves the efficiency of not only the edge devices but also the centralized analytical systems. Given the promise edge computing delivers, it is poised to take off as one of the most important technology trends in 2020 and beyond.

Ravi Shankar is Senior Vice President and Chief Marketing Officer at Denodo, a leading provider of data virtualization software.


All rights reserved © 2025 Enterprise Integration News, Inc.